目录

目录

- Introduction

- Prompt engineering

- LLM output configuration

- Output length

- Sampling controls

- Temperature

- Top-K and top-P.

- Putting it all together

- Prompting techniques

- General prompting / zero shot

- One-shot & few-shot

- System, contextual and role prompting

- System prompting

- Role prompting

- Contextual prompting

- Step-back prompting

- Chain of Thought (CoT)

- Self-consistency

- Tree of Thoughts (ToT)

- ReAct (reason & act)

- Automatic Prompt Engineering (APE)

- Code prompting

- Prompts for writing code

- Prompts for explaining code

- Prompts for translating code

- Prompts for debugging and reviewing code

- What about multimodal prompting?

- Best Practices

- Provide examples

- Be concise

- Be specific about the output

- Use Instructions over Constraints

- Control the max token length

- Use variables in prompts

- Experiment with input formats and writing styles

- For few-shot prompting with classification tasks, mix up the classes

- Adapt to model updates

- Experiment with output formats

- JSON Repair

- Working with Schemas

- Experiment together with other prompt engineers

- CoT Best practices

- Document the various prompt attempts

- Summary

- Endnotes

You don't need to be a data scientist or a machine learning engineer — everyone can write a prompt.

Introduction

When thinking about a large language model input and output, a text prompt (sometimes accompanied by other modalities such as image prompts) is the input the model uses to predict a specific output. You don’t need to be a data scientist or a machine learning engineer ~ everyone can write a prompt. However, crafting the most effective prompt can be complicated. Many aspects of your prompt affect its efficacy: the model you use, the model's training data, the model configurations, your word-choice, style and tone, structure, and context all matter. Therefore, prompt engineering is an iterative process. Inadequate prompts can lead to ambiguous, inaccurate responses, and can hinder the model's ability to provide meaningful output.

When you chat with the Gemini chatbot,¹ you basically write prompts, however this whitepaper focuses on writing prompts for the Gemini model within Vertex AI or by using the API, because by prompting the model directly you will have access to the configuration such as temperature etc.

This whitepaper discusses prompt engineering in detail. We will look into the various prompting techniques to help you getting started and share tips and best practices to become a prompting expert. We will also discuss some of the challenges you can face while crafting prompts.

Prompt engineering

Remember how an LLM works; it’s a prediction engine. The model takes sequential text as an input and then predicts what the following token should be, based on the data it was trained on. The LLM is operationalized to do this over and over again, adding the previously predicted token to the end of the sequential text for predicting the following token. The next token prediction is based on the relationship between what's in the previous tokens and what the LLM has seen during its training.

When you write a prompt, you are attempting to set up the LLM to predict the right sequence of tokens. Prompt engineering is the process of designing high-quality prompts that guide LLMs to produce accurate outputs. This process involves tinkering to find the best prompt, optimizing prompt length, and evaluating a prompt’s writing style and structure in relation to the task. In the context of natural language processing and LLMs, a prompt is an input provided to the model to generate a response or prediction.

These prompts can be used to achieve various kinds of understanding and generation tasks such as text summarization, information extraction, question and answering, text classification, language or code translation, code generation, and code documentation or reasoning.

Please feel free to refer to Google's prompting guides² ³ with simple and effective prompting examples.

When prompt engineering, you will start by choosing a model. Prompts might need to be optimized for your specific model, regardless of whether you use Gemini language models in Vertex AI, GPT, Claude, or an open source model like Gemma or LLaMA.

Besides the prompt, you will also need to tinker with the various configurations of a LLM.

LLM output configuration

Once you choose your model you will need to figure out the model configuration. Most LLMs come with various configuration options that control the LLM’s output. Effective prompt engineering requires setting these configurations optimally for your task.

Output length

An important configuration setting is the number of tokens to generate in a response. Generating more tokens requires more computation from the LLM, leading to higher energy consumption, potentially slower response times, and higher costs.

Reducing the output length of the LLM doesn’t cause the LLM to become more stylistically or textually succinct in the output it creates, it just causes the LLM to stop predicting more tokens once the limit is reached. If your needs require a short output length, you'll also possibly need to engineer your prompt to accommodate.

Output length restriction is especially important for some LLM prompting techniques, like ReAct, where the LLM will keep emitting useless tokens after the response you want.

Be aware, generating more tokens requires more computation from the LLM, leading to higher energy consumption and potentially slower response times, which leads to higher costs.

Sampling controls

LLMs do not formally predict a single token. Rather, LLMs predict probabilities for what the next token could be, with each token in the LLM’s vocabulary getting a probability. Those token probabilities are then sampled to determine what the next produced token will be. Temperature, top-K, and top-P are the most common configuration settings that determine how predicted token probabilities are processed to choose a single output token.⁴

Temperature

Temperature controls the degree of randomness in token selection. Lower temperatures are good for prompts that expect a more deterministic response, while higher temperatures can lead to more diverse or unexpected results. A temperature of 0 (greedy decoding) is deterministic: the highest probability token is always selected (though note that if two tokens have the same highest predicted probability, depending on how tiebreaking is implemented you may not always get the same output with temperature 0).

Temperatures close to the max tend to create more random output. And as temperature gets higher and higher, all tokens become equally likely to be the next predicted token.

The Gemini temperature control can be understood in a similar way to the softmax function used in machine learning. A low temperature setting mirrors a low softmax temperature (T), emphasizing a single, preferred temperature with high certainty. A higher Gemini temperature setting is like a high softmax temperature, making a wider range of temperatures around the selected setting more acceptable. This increased uncertainty accommodates scenarios where a rigid, precise temperature may not be essential like for example when experimenting with creative outputs.

Top-K and top-P.

Top-K and top-P (also known as nucleus sampling)⁴ are two sampling settings used in LLMs to restrict the predicted next token to come from tokens with the top predicted probabilities. Like temperature, these sampling settings control the randomness and diversity of generated text.

- Top-K sampling selects the top K most likely tokens from the model's predicted distribution. The higher top-k, the more creative and varied the model's output; the lower top-K, the more restive and factual the model's output. A top-K of

1is equivalent to greedy decoding. - Top-P sampling selects the top tokens whose cumulative probability does not exceed a certain value (

P). Values for P range from0(greedy decoding) to1(all tokens in the LLM's vocabulary).

The best way to choose between top-K and top-P is to experiment with both methods (or both together) and see which one produces the results you are looking for.

Putting it all together

Choosing between top-K, top-P, temperature, and the number of tokens to generate, depends on the specific application and desired outcome, and the settings all impact one another. It’s also important to make sure you understand how your chosen model combines the different sampling settings together.

If temperature, top-K, and top-P are all available (as in Vertex Studio), tokens that meet both the top-K and top-P criteria are candidates for the next predicted token, and then temperature is applied to sample from the tokens that passed the top-K and top-P criteria. If only top-K or top-P is available, the behavior is the same but only the one top-K or P setting is used.

If temperature is not available, whatever tokens meet the top-K and/or top-P criteria are then randomly selected from to produce a single next predicted token.

At extreme settings of one sampling configuration value, that one sampling setting either cancels out other configuration settings or becomes irrelevant.

- If you set

temperatureto0,top-Kandtop-Pbecome irrelevant-the most probable token becomes the next token predicted. if you settemperatureextremely high (above1-generally into the10s),temperaturebecomes irrelevant and whatever tokens make it through thetop-Kand/ortop-Pcriteria are then randomly sampled to choose a next predicted token. - If you set

top-Kto1,temperatureandtop-Pbecome irrelevant. Only one token passes thetop-Kcriteria, and that token is the next predicted token. If you settop-Kextremely high, like to the size of the LLM’s vocabulary, any token with a nonzero probability of being the next token will meet thetop-Kcriteria and none are selected out. - If you set

top-Pto0(or a very small value), most LLM sampling implementations will then only consider the most probable token to meet thetop-Pcriteria, makingtemperatureandtop-Kirrelevant. If you settop-Pto1, any token with a nonzero probability of being the next token will meet thetop-Pcriteria, and none are selected out.

As a general starting point, a temperature of .2, top-P of .95, and top-K of 30 will give you relatively coherent results that can be creative but not excessively so. If you want especially creative results, try starting with a temperature of .9, top-P of .99, and top-K of 40. And if you want less creative results, try starting with a temperature of .1, top-P of .9, and top-K of 20. Finally, if your task always has a single correct answer (e.g., answering a math problem), start with a temperature of 0.

NOTE: With more freedom (higher temperature, top-k, top-P, and output tokens), the LLM might generate text that is less relevant.

WARNING: Have you ever seen a response ending with a large amount of filler words? This is also known as the “repetition loop bug", which is a common issue in Large Language Models where the model gets stuck in a cycle, repeatedly generating the same (filler) word, phrase, or sentence structure, often exacerbated by inappropriate temperature and

top-k/top-psettings. This can occur at both low and high temperature settings, though for different reasons. At low temperatures, the model becomes overly deterministic, sticking rigidly to the highest probability path, which can lead to a loop if that path revisits previously generated text. Conversely, at high temperatures, the model's output becomes excessively random, increasing the probability that a randomly chosen word or phrase will, by chance, lead back to a prior state, creating a loop due to the vast number of available options. In both cases, the model's sampling process gets “stuck,” resulting in monotonous and unhelpful output until the output window is filled. Solving this often requires careful tinkering with temperature andtop-k/top-pvalues to find the optimal balance between determinism and randomness.

Prompting techniques

LLMs are tuned to follow instructions and are trained on large amounts of data so they can understand a prompt and generate an answer. But LLMs aren’t perfect; the clearer your prompt text, the better it is for the LLM to predict the next likely text. Additionally, specific techniques that take advantage of how LLMs are trained and how LLMs work will help you get the relevant results from LLMs

Now that we understand what prompt engineering is and what it takes, let's dive into some examples of the most important prompting techniques.

General prompting / zero shot

A zero-shot⁵ prompt is the simplest type of prompt. It only provides a description of a task and some text for the LLM to get started with. This input could be anything: a question, a start of a story, or instructions. The name zero-shot stands for ‘no examples’.

Let's use Vertex AI Studio (for Language) in Vertex AI,⁶ which provides a playground to test prompts. In Table 1, you will see an example zero-shot prompt to classify movie reviews.

The table format as used below is a great way of documenting prompts. Your prompts will likely go through many iterations before they end up in a codebase, so it’s important to keep track of your prompt engineering work in a disciplined, structured way. More on this table format, the importance of tracking prompt engineering work, and the prompt development process is in the Best Practices section later in this chapter (“Document the various prompt attempts”).

The model temperature should be set to a low number, since no creativity is needed, and we use the gemini-pro default top-K and top-P values, which effectively disable both settings (see ‘LLM Output Configuration’ above). Pay attention to the generated output. The words disturbing and masterpiece should make the prediction a little more complicated, as both words are used in the same sentence.

Table 1. An example of zero-shot prompting

| Name | 1_1_movie classification |

|---|---|

| Goal | Classify movie reviews as positive, neutral or negative. |

| Model | gemini-pro |

| Temperature | 0.1 |

| Token Limit | 5 |

| Top-k | NA |

| Top-P | 1 |

| Prompt | Classify movie reviews as POSITIVE, NEUTRAL or NEGATIVE. Review: "Her" is a disturbing study revealing the direction humanity is headed if AI is allowed to keep evolving, unchecked. I wish there were more movies like this masterpiece. Sentiment: |

| Output | POSITIVE |

When zero-shot doesn’t work, you can provide demonstrations or examples in the prompt, which leads to “one-shot” and “few-shot” prompting. General prompting / zero shot

One-shot & few-shot

When creating prompts for AI models, it is helpful to provide examples. These examples can help the model understand what you are asking for. Examples are especially useful when you want to steer the model to a certain output structure or pattern.

A one-shot prompt, provides a single example, hence the name one-shot. The idea is the model has an example it can imitate to best complete the task.

A few-shot prompt ⁷ provides multiple examples to the model. This approach shows the model a pattern that it needs to follow. The idea is similar to one-shot, but multiple examples of the desired pattern increases the chance the model follows the pattern.

The number of examples you need for few-shot prompting depends on a few factors, including the complexity of the task, the quality of the examples, and the capabilities of the generative AI (gen AI) model you are using. As a general rule of thumb, you should use at least three to five examples for few-shot prompting. However, you may need to use more examples for more complex tasks, or you may need to use fewer due to the input length limitation of your model.

Table 2 shows a few-shot prompt example, let’s use the same gemini-pro model configuration settings as before, other than increasing the token limit to accommodate the need for a longer response.

Table 2. An example of few-shot prompting

| 类型 | 内容 |

|---|---|

| Goal | Parse pizza orders to JSON |

| Model | gemini-pro |

| Temperature | 0.1 |

| Token Limit | 250 |

| Top-k | N/A |

| Top-P | 1 |

| Prompt | Parse a customer's pizza order into valid JSON: EXAMPLE: I want a small pizza with cheese, tomato sauce, and pepperoni. JSON Response: json<br>{ "ingredients": [["cheese", "tomato sauce", "peperoni"]] }<br>EXAMPLE: Can I get a large pizza with tomato sauce, basil and mozzarella JSON Response: json<br>{ "size": "large", "type": "normal", "ingredients": [["tomato sauce", "bazel", "mozzarella"]] }<br>Now, I would like a large pizza, with the first half cheese and mozzarella. And the other tomato sauce, ham and pineapple. JSON Response: |

| Output | json<br>{ <br> "size": "large", <br> "type": "half-half", <br> "ingredients": [<br> ["cheese", "mozzarella"], <br> ["tomato sauce", "ham", "pineapple"]<br> ] <br>}<br> |

When you choose examples for your prompt, use examples that are relevant to the task you want to perform. The examples should be diverse, of high quality, and well written. One small mistake can confuse the model and will result in undesired output.

If you are trying to generate output that is robust to a variety of inputs, then it is important to include edge cases in your examples. Edge cases are inputs that are unusual or unexpected, but that the model should still be able to handle.

System, contextual and role prompting

System, contextual and role prompting are all techniques used to guide how LLMs generate text, but they focus on different aspects:

- System prompting sets the overall context and purpose for the language model. It defines the ‘big picture’ of what the model should be doing, like translating a language, classifying a review etc.

- Contextual prompting provides specific details or background information relevant to the current conversation or task. It helps the model to understand the nuances of what's being asked and tailor the response accordingly.

- Role prompting assigns a specific character or identity for the language model to adopt. This helps the model generate responses that are consistent with the assigned role and its associated knowledge and behavior.

There can be considerable overlap between system, contextual, and role prompting. E.g. a prompt that assigns a role to the system, can also have a context.

However, each type of prompt serves a slightly different primary purpose:

- System prompt: Defines the model's fundamental capabilities and overarching purpose.

- Contextual prompt: Provides immediate, task-specific information to guide the response. It’s highly specific to the current task or input, which is dynamic.

- Role prompt: Frames the model's output style and voice. It adds a layer of specificity and personality.

Distinguishing between system, contextual, and role prompts provides a framework for designing prompts with clear intent, allowing for flexible combinations and making it easier to analyze how each prompt type influences the language model's output.

Let's dive into these three different kinds of prompts.

System prompting

Table 3 contains a system prompt, where I specify additional information on how to return the output. I increased the temperature to get a higher creativity level, and I specified a higher token limit. However, because of my clear instruction on how to return the output the model didn’t return extra text.

Table 3. An example of system prompting

| 类型 | 内容 |

|---|---|

| Goal | Classify movie reviews as positive, neutral or negative. |

| Model | gemini-pro |

| Temperature | 1 |

| Token Limit | 5 |

| Top-k | 40 |

| Top-P | 0.8 |

| Prompt | Classify movie reviews as positive, neutral or negative. Only return the label in uppercase. Review: “Her” is a disturbing study revealing the direction humanity is headed if AI is allowed to keep evolving, unchecked. It’s so disturbing I couldn't watch it. Sentiment: |

| Output | NEGATIVE |

System prompts can be useful for generating output that meets specific requirements. The name ‘system prompt’ actually stands for ‘providing an additional task to the system’. For example, you could use a system prompt to generate a code snippet that is compatible with a specific programming language, or you could use a system prompt to return a certain structure. Have a look into Table 4, where I return the output in JSON format.

Table 4. An example of system prompting with JSON format

| 类型 | 内容 |

|---|---|

| Goal | Classify movie reviews as positive, neutral or negative, return JSON. |

| Model | gemini-pro |

| Temperature | 1 |

| Token Limit | 1024 |

| Top-k | 40 |

| Top-P | 0.8 |

| Prompt | Classify movie reviews as positive, neutral or negative. Return valid JSON Review: “Her” is a disturbing study revealing the direction humanity is headed if AI is allowed to keep evolving, unchecked. It’s so disturbing I couldn't watch it. Schema: json<br>MOVIE: {<br> "sentiment": String // "POSITIVE" | "NEGATIVE" | "NEUTRAL",<br> "name": String<br>}<br>MOVIE REVIEWS: {<br> "movie_reviews": [Movie]<br>}<br>JSON Response: |

| Output | json<br>{<br> "movie_reviews": [<br> {<br> "sentiment": "NEGATIVE",<br> "name": "Her"<br> }<br> ]<br>}<br> (Example corrected based on context and schema, original output was incomplete/malformed) |

There are some benefits in returning JSON objects from a prompt that extracts data. In a real-world application I don’t need to manually create this JSON format, I can already return the data in a sorted order (very handy when working with datetime objects), but most importantly, by prompting for a JSON format it forces the model to create a structure and limit hallucinations.

System prompts can also be really useful for safety and toxicity. To control the output, simply add an additional line to your prompt like: “You should be respectful in your answer..”

Role prompting

Role prompting is a technique in prompt engineering that involves assigning a specific role to the gen AI model. This can help the model to generate more relevant and informative output, as the model can craft its responses to the specific role that it has been assigned.

For example, you could role prompt a gen AI model to be a book editor, a kindergarten teacher, or a motivational speaker. Once the model has been assigned a role, you can then give it prompts that are specific to that role. For example, you could prompt a teacher to create a lesson plan that they can then review. Have a look into Table 5, where the model takes the role of a travel guide.

Table 5. An example of role prompting

| 类型 | 内容 |

|---|---|

| Goal | Act as travel guide and provide 3 travel suggestions |

| Model | gemini-pro |

| Temperature | 1 |

| Token Limit | 1024 |

| Top-k | 40 |

| Top-P | 0.8 |

| Prompt | I want you to act as a travel guide. I will write to you about my location and you will suggest 3 places to visit near me. In some cases, I will also give you the type of places I will visit. My suggestion ‘I am in Amsterdam and I want to visit only museums. Travel Suggestions: |

| Output | 1. Rijksmuseum: World-renowned museum, known for Dutch masterpieces like Rembrandt's “The Night Watch.” Don't miss the incredible collection of paintings and artifacts showcasing Dutch art and history. 2. Van Gogh Museum: Immerse yourself in the captivating works of Vincent van Gogh. The museum houses the largest collection of his paintings and drawings, including “The Starry Night” and “Sunflowers.” 3. Stedelijk Museum Amsterdam: Discover modern and contemporary art from around the world. The museum's collection includes works by Picasso, Kandinsky, and Rietveld, housed in a striking modern building. |

The above example shows an example of taking the role of a travel agent. When you would change the role to a teacher of geography, you would notice that you will receive a different response.

Defining a role perspective for an AI model gives it a blueprint of the tone, style, and focused expertise you're looking for to improve the quality, relevance, and effectiveness of your output.

Here are some styles you can choose from which I find effective:

Confrontational, Descriptive, Direct, Formal, Humorous, Influential, Informal, Inspirational, Persuasive

Let's change our prompt in Table 6 to include a humorous and inspirational style.

Table 6. An example of role prompting with a humorous tone and style

| 类型 | 内容 |

|---|---|

| Prompt | I want you to act as a travel guide. I will write to you about my location and you will suggest 3 places to visit near me in a humorous style. My suggestion: "I am in Manhattan.” Travel Suggestions: |

| Output | 1. Behold the Empire State of Mind: Ascend to the dizzying heights of the Empire State Building and bask in the glory of Manhattan's skyline. Prepare to feel like King Kong atop the Big Apple, minus the giant ape-sized banana. 2. Get Artsy-Fartsy at MoMA: Unleash your inner art aficionado at the Museum of Modern Art (MoMA). Gaze upon masterpieces that will boggle your mind and make you question whether your stick-figure drawings have any artistic merit. 3. Shop ‘Til You Drop on Fifth Avenue: Indulge in some retail therapy on the iconic Fifth Avenue. Brace yourself for sticker shock as you window-shop (or actually shop) at designer boutiques that will make your wallet cry. But hey, you're in Manhattan, so you might as well embrace the fabulousness! |

Contextual prompting

By providing contextual prompts, you can help ensure that your AI interactions are as seamless and efficient as possible. The model will be able to more quickly understand your request and be able to generate more accurate and relevant responses, as you can see in the example of Table 7.

Table 7. An example of contextual prompting

| 类型 | 内容 |

|---|---|

| Goal | Suggest articles for a blog about retro games |

| Model | gemini-pro |

| Temperature | 1 |

| Token Limit | 1024 |

| Top-k | 40 |

| Top-P | 0.8 |

| Prompt | Context: You are writing for a blog about retro 80's arcade video games. Suggest 3 topics to write an article about with a few lines of description of what this article should contain. |

| Output | 1. The Evolution of Arcade Cabinet Design: This article would explore the evolution of arcade cabinet designs, from the early wood and metal cabinets of the 1970s to the sleek, neon-lit designs of the 1980s and beyond. 2. Blast From The Past: Iconic Arcade Games of The 80's: - This article would feature a glimpse into the history, impact, and legacy of some of the most iconic and influential arcade games of the 1980s, highlighting their innovations, popular mechanics, and enduring charm. 3. The Rise and Retro Revival of Pixel Art: ~ This article would delve into the evolution of pixel art as a defining visual style of early arcade games, tracing its roots, exploring its techniques, and discussing the resurgence of pixel art in modern games and digital art. |

Step-back prompting

Step-back⁸ prompting is a technique for improving the performance by prompting the LLM to first consider a general question related to the specific task at hand, and then feeding the answer to that general question into a subsequent prompt for the specific task. This ‘step back’ allows the LLM to activate relevant background knowledge and reasoning processes before attempting to solve the specific problem.

By considering the broader and underlying principles, LLMs can generate more accurate and insightful responses. Step-back prompting encourages LLMs to think critically and apply their knowledge in new and creative ways. It changes the final prompt doing the task by utilizing more knowledge in the LLM’s parameters than would otherwise come into play when the LLM is prompted directly.

It can help to mitigate biases in LLM responses, by focusing on general principles instead of specific details, step-back prompting.

Let's have a look into these examples to understand how step-back prompting can improve the results. Let’s first review a traditional prompt (Table 8) before we compare it to a step back prompt (Table 9):

Table 8. A traditional prompt before we compare it with a step back prompt

| Parameter | Value |

|---|---|

| Goal | Write a storyline for a level of a first-person shooter video game. |

| Model | gemini-pro |

| Temperature | 1 |

| Token Limit | 1024 |

| Top-k | 40 |

| Top-P | 0.8 |

| Prompt | Write a one paragraph storyline for a new level of a first-person shooter video game that is challenging and engaging. |

| Output | The level begins with the player’s squad being ambushed in a dense urban area by a relentless enemy faction. Players must fight their way through a maze of back alleys and dilapidated buildings, utilizing stealth and precision to eliminate enemy forces and avoid detection. The player must use their environment to their advantage, setting traps, utilizing cover, and making calculated moves to survive. Along the way, they uncover intel suggesting the enemy is planning a devastating attack, and the player must race against time to gather evidence and relay it back to their headquarters before the enemy can carry out their plans. |

When you set the temperature to 1, you might end up with all kinds of creative writings for a storyline, but it’s also quite random and generic. So let's take a step back:

Table 9. An example of prompting for self consistency (Step-back initial query)

| 类型 | 内容 |

|---|---|

| Prompt | Based on popular first-person shooter action games, what are 5 fictional key settings that contribute to a challenging and engaging level storyline in a first-person shooter video game? |

| Output | 1. Abandoned Military Base: A sprawling, post-apocalyptic military complex crawling with mutated soldiers and rogue robots, ideal for challenging firearm combat. 2. Cyberpunk City: A neon-lit, futuristic urban environment with towering skyscrapers and dense alleyways, featuring cybernetically enhanced enemies and hacking mechanics. 3. Alien Spaceship: A vast alien vessel stranded on Earth, with eerie corridors, zero-gravity sections, and extraterrestrial creatures to encounter. 4. Zombie-Infested Town: A desolate town overrun by hordes of aggressive zombies, featuring intense close-quarters combat and puzzle-solving to find safe passage. 5. Underwater Research Facility: A deep-sea laboratory flooded with water, filled with mutated aquatic creatures, and requiring stealth and underwater exploration skills to survive. |

Yeah those topics seem like a good fit for a first-person video game. Let’s go back to the original prompt, but this time we include the answer of the step back as context and see what it will return.

Table 10. An example of prompting for self consistency (Applying step-back context)

| 类型 | 内容 |

|---|---|

| Prompt | Context: 5 engaging themes for a first person shooter video game: 1. Abandoned Military Base: A sprawling, post-apocalyptic military complex crawling with mutated soldiers and rogue robots, ideal for challenging firearm combat. 2. Cyberpunk City: A neon-lit, futuristic urban environment with towering skyscrapers and dense alleyways, featuring cybernetically enhanced enemies and hacking mechanics. 3. Alien Spaceship: A vast alien vessel stranded on Earth, with eerie corridors, zero-gravity sections, and extraterrestrial creatures to encounter. 4. Zombie-Infested Town: A desolate town overrun by hordes of aggressive zombies, featuring intense close-quarters combat and puzzle-solving to find safe passage. 5. Underwater Research Facility: A deep-sea laboratory flooded with water, filled with mutated aquatic creatures, and requiring stealth and underwater exploration skills to survive. Take one of the themes and write a one paragraph storyline for a new level of a first-person shooter video game that is challenging and engaging. |

| Output | In the heart of a murky abyss, lies a dilapidated underwater research facility, standing as a testament to human ambition and its disastrous consequences. Shrouded in darkness, pulsating with the hum of malfunctioning machinery, and haunted by the echoes of drowned souls, the facility is now a breeding ground for a nightmare borne from the deep sea. The player, an elite marine equipped with advanced diving gear and experimental weaponry, is tasked with venturing into this aquatic realm of terror. Armed with resilience and resourcefulness, they must navigate treacherous corridors, solve cryptic puzzles, and confront gruesome sea monstrosities that lurk in the depths. From grappling with the crushing pressure of the deep sea to outsmarting cunning aquatic predators, every moment in this uncharted underworld tests the limits of human endurance and courage. |

That looks like an interesting video game! By using step back prompting techniques you can increase the accuracy of your prompts.

Chain of Thought (CoT)

Chain of Thought (CoT)⁹ prompting is a technique for improving the reasoning capabilities of LLMs by generating intermediate reasoning steps. This helps the LLM generate more accurate answers. You can combine it with few-shot prompting to get better results on more complex tasks that require reasoning before responding as it’s a challenge with a zero-shot chain of thought.

CoT has a lot of advantages. First of all, it’s low-effort while being very effective and works well with off-the-shelf LLMs (so no need to finetune). You also get interpretability with CoT prompting, as you can learn from the LLM’s responses and see the reasoning steps that were followed. if there’s a malfunction, you will be able to identify it. Chain of thought appears to improve robustness when moving between different LLM versions. Which means the performance of your prompt should drift less between different LLMs than if your prompt does not use reasoning chains. Of course there are also disadvantages, but they are somewhat intuitive.

The LLM response includes the chain of thought reasoning, which means more output tokens, which means predictions cost more money and take longer.

To explain the following example in Table 11, let's first try to create a prompt that is not using CoT prompting to showcase the flaws of a large language model.

Table 11. An example of a prompt which is trying to solve a mathematical problem

| Prompt | Output |

|---|---|

| When I was 3 years old, my partner was 3 times my age. Now, I am 20 years old. How old is my partner? | 63 years old |

Yikes. That's obviously the wrong answer. As a matter of fact, LLMs often struggle with mathematical tasks and can provide incorrect answers — even for a task as simple as multiplying two numbers. This is because they are trained on large volumes of text and math may require a different approach. So let’s see if intermediate reasoning steps will improve the output.

Table 12. An example of Chain of Thought prompting

| Prompt | Output |

|---|---|

| When I was 3 years old, my partner was 3 times my age. Now, I am 20 years old. How old is my partner? Let's think step by step. | 1. Let’s assume my current age is “x” years. 2. When I was 3 years old, my age was 3 years. 3. My partner's age at that time was 3 times my age, which means my partner's age was 3×3=9 years. 4. Now, I am 20 years old, which means my age has increased by 20−3=17 years since I was 3 years old. 5. Since my partner's age was 9 years when I was 3 years old, and my age has increased by 17 years, my partner’s age must have also increased by 17 years. 6. Therefore, my partner's current age is 9+17=26 years. So, my partner is 26 years old. |

Alright, now the final answer is correct. That's because we clearly instructed the LLM to explain each step instead of just returning an answer. It’s interesting to see that the model adds up the 17 increased years. In my head I would take the difference of years between my partner and me and add those up. (20+(9-3)). Let’s help the model to think a little bit more like me.

Table 12 is an example of ‘zero-shot’ Chain of thought. Chain of thought prompting can be very powerful when combined with a single-shot or few-shot, as what you can see in Table 13:

Table 13. An example of chain of thought prompting with a single-shot

| Prompt | Output |

|---|---|

| Q: When my brother was 2 years old, I was double his age. Now I am 49 years old. How old is my brother? Let's think step by step. A: When my brother was 2 years, I was 2 × 2 = 4 years old. That's an age difference of 2 years and I am older. Now I am 49 years old, so my brother is 49 − 2 = 47 years old. The answer is 47. Q: When I was 3 years old, my partner was 3 times my age. Now, I am 20 years old. How old is my partner? Let's think step by step. | When I was 3 years old, my partner was 3 × 3 = 9 years old. That's an age difference of 6 years and my partner is older. Now I am 20 years old, so my partner is 20 + 6 = 26 years old. The answer is 26. |

Chain of thought can be useful for various use-cases. Think of code generation, for breaking down the request into a few steps, and mapping those to specific lines of code. Or for creating synthetic data when you have some kind of seed like “The product is called XYZ, write a description guiding the model through the assumptions you would make based on the product given title.” Generally, any task that can be solved by ‘talking through is a good candidate for a chain of thought. If you can explain the steps to solve the problem, try chain of thought.

Please refer to the notebook¹⁰ hosted in the GoogleCloudPlatform Github repository which will go into further detail on CoT prompting:

In the best practices section of this chapter, we will learn some best practices specific to Chain of thought prompting.

Self-consistency

While large language models have shown impressive success in various NLP tasks, their ability to reason is often seen as a limitation that cannot be overcome solely by increasing model size. As we learned in the previous Chain of Thought prompting section, the model can be prompted to generate reasoning steps like a human solving a problem. However CoT uses a simple ‘greedy decoding’ strategy, limiting its effectiveness. Self-consistency¹¹ combines sampling and majority voting to generate diverse reasoning paths and select the most consistent answer. It improves the accuracy and coherence of responses generated by LLMs.

Self-consistency gives a pseudo-probability likelihood of an answer being correct, but obviously has high costs.

It follows the following steps:

- Generating diverse reasoning paths: The LLM is provided with the same prompt multiple times. A high temperature setting encourages the model to generate different reasoning paths and perspectives on the problem.

- Extract the answer from each generated response.

- Choose the most common answer.

Let's look into an example of an email classification system, which classifies an email as IMPORTANT or NOT IMPORTANT. A zero-shot chain of thought prompt will be sent to the LLM multiple times, to see if the responses differ after each submit. Notice the friendly tone, the word choice and the sarcasm that’s been used in the email. All this could trick the LLM.

(Table 14: Self-consistency Example - Multiple Attempts)

Attempt 1

| Section/Step | Content / Description |

|---|---|

| Prompt Email | Hi, I have seen you use Wordpress for your website. A great open source content management system. I have used it in the past too. It comes with lots of great user plugins. And it's pretty easy to set up. I did notice a bug in the contact form, which happens when you select the name field. See the attached screenshot of me entering text in the name field. Notice the JavaScript alert box that I invoked. But for the rest it's a great website. I enjoy reading it. Feel free to leave the bug in the website, because it gives me more interesting things to read. Cheers, Harry the Hacker. |

| Step 1: Identify the purpose of the email | The purpose of the email is to inform the recipient of a bug in the contact form on their website. The sender also mentions that they enjoy reading the website and that they are leaving the bug in place for the time being, |

| Step 2: Assess the potential impact of the bug | The bug in the contact form could allow an attacker to execute arbitrary JavaScript code on the website. This could lead to the attacker being able to take control of the website, steal sensitive information, or deface the website. |

| Step 3: Consider the credibility of the sender. | The sender of the email claims to be a hacker named Harry. However, there is no way to verify the sender's identity. It is possible that the sender is a legitimate hacker who is trying to help the recipient, but it is also possible that the sender is a malicious actor who is trying to trick the recipient into clicking on a malicious link or downloading a malicious file. |

| Conclusion: IMPORTANT | Based on the potential impact of the bug and the credibility of the sender, the email should be classified as IMPORTANT. The recipient should take immediate action to fix the bug and protect their website from attack. |

Attempt 2

| Section / Explanation Point | Content / Description |

|---|---|

| Prompt Email | Hi, I have seen you use Wordpress for your website. A great open source content management system. I have used it in the past too. It comes with lots of great user plugins. And it's pretty easy to set up. I did notice a bug in the contact form, which happens when you select the name field. See the attached screenshot of me entering text in the name field. Notice the JavaScript alert box that I invoked. But for the rest it's a great website. I enjoy reading it. Feel free to leave the bug in the website, because it gives me more interesting things to read. Cheers, Harry the Hacker. |

| 1. Lack of urgency: | The email does not convey any sense of urgency or time-sensitivity. It starts with a casual greeting and presents the information in a matter-of-fact tone. |

| 2. Non-critical bug report: | The bug reported in the contact form is described as interesting but not critical. The email sender even suggests that the website owner can leave the bug in place. |

| 3. Lack of personal impact: | The email does not indicate any direct or personal impact on the website owner. It simply mentions that the sender enjoys reading the website. |

| 4. Absence of action request: | The email does not explicitly request any action from the website owner. It does not ask for the bug to be fixed or provide any specific suggestions for improvement. |

| 5. Sender’s intent: | The sender's intention seems to be more focused on sharing an observation and expressing interest in the website rather than raising a serious concern or requesting assistance. |

| Conclusion: NOT IMPORTANT |

Attempt 3

| Section/Step | Content / Description |

|---|---|

| Prompt Email | Hi, I have seen you use Wordpress for your website. A great open source content management system. I have used it in the past too. It comes with lots of great user plugins. And it's pretty easy to set up. I did notice a bug in the contact form, which happens when you select the name field. See the attached screenshot of me entering text in the name field. Notice the JavaScript alert box that I invoked. But for the rest it's a great website. I enjoy reading it. Feel free to leave the bug in the website, because it gives me more interesting things to read. Cheers, Harry the Hacker. |

| Step 1: Identify the purpose of the email. | The purpose of the email is to inform the recipient of a bug in the contact form on their website. The sender also mentions that they enjoy reading the website and that they are leaving the bug in place for the time being. |

| Step 2: Assess the potential impact of the bug. | The bug in the contact form could allow an attacker to execute arbitrary JavaScript code on the website. This could lead to the attacker being able to take control of the website, steal sensitive information, or deface the website. |

| Step 3: Consider the credibility of the sender. | The sender of the email claims to be a hacker named Harry. However, there is no way to verify the sender's identity. It is possible that the sender is a legitimate hacker who is trying to help the recipient, but it is also possible that the sender is a malicious actor who is trying to exploit the bug for their own gain, |

| Conclusion | Based on the above factors, the email should be classified as IMPORTANT. The bug in the contact form is a serious security risk, and the sender's credibility is unknown. The recipient should take immediate action to fix the bug and |

You can use the above prompt and try to see if it returns a consistent classification. Depending on the model that you are using and the temperature configuration, it might return “IMPORTANT” or “NOT IMPORTANT”.

By generating many Chains of Thoughts, and taking the most commonly occurring answer (“IMPORTANT”), we can get a more consistently correct answer from the LLM.

This example shows how self-consistency prompting can be used to improve the accuracy of an LLM’s response by considering multiple perspectives and selecting the most consistent answer.

Tree of Thoughts (ToT)

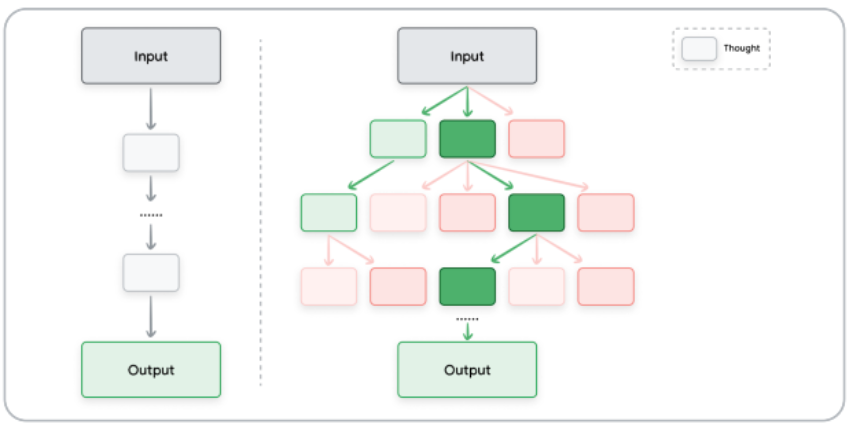

Now that we are familiar with chain of thought and self-consistency prompting, let’s review Tree of Thoughts (ToT).¹² It generalizes the concept of CoT prompting because it allows LLMs to explore multiple different reasoning paths simultaneously, rather than just following a single linear chain of thought. This is depicted in Figure 1.

Figure 1. A visualization of chain of thought prompting on the left versus. Tree of Thoughts prompting on the right

This approach makes ToT particularly well-suited for complex tasks that require exploration. It works by maintaining a tree of thoughts, where each thought represents a coherent language sequence that serves as an intermediate step toward solving a problem. The model can then explore different reasoning paths by branching out from different nodes in the tree.

There's a great notebook, which goes into a bit more detail showing The Tree of Thought (ToT) which is based on the paper ‘Large Language Model Guided Tree-of-Thought’¹²

ReAct (reason & act)

Reason and act (ReAct) [¹³] prompting is a paradigm for enabling LLMs to solve complex tasks using natural language reasoning combined with external tools (search, code interpreter etc.) allowing the LLM to perform certain actions, such as interacting with external APIs to retrieve information which is a first step towards agent modeling.

ReAct mimics how humans operate in the real world, as we reason verbally and can take actions to gain information. ReAct performs well against other prompt engineering approaches in a variety of domains.

ReAct prompting works by combining reasoning and acting into a thought-action loop. The LLM first reasons about the problem and generates a plan of action. It then performs the actions in the plan and observes the results. The LLM then uses the observations to update its reasoning and generate a new plan of action. This process continues until the LLM reaches a solution to the problem.

To see this in action, you need to write some code. In Snippet 1 I am using the langchain framework for Python, together with VertexAI (google-cloud-aiplatform) and the google-search-results pip packages.

To run this sample you must create a (free) SerpAPI key from https://serpapi.com/manage-api-key and set an environment variable SERPAPI_API_KEY.

Next let’s write some Python code, with the task for the LLM to figure out: How many children have a famous dad that performs in the band Metallica.

Snippet 1. Creating a ReAct Agent with LangChain and VertexAI

from langchain.agents import load_tools from langchain.agents import initialize_agent from langchain.agents import AgentType from langchain.llms import VertexAI prompt = "How many kids do the band members of Metallica have?" llm = VertexAI(temperature=0.1) tools = load_tools(["serpapi"], llm=llm) agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True) agent.run(prompt)

Snippet 2 shows the result. Notice that ReAct makes a chain of five searches. In fact, the LLM is scraping Google search results to figure out the band names. Then, it lists the results as observations and chains the thought for the next search.

Snippet 2. Executing ReAct Agent in the terminal

> Entering new AgentExecutor chain...

Thought: I need to find out the names of the band members of Metallica and then search for how many kids each member has.

Action: Search

Action Input: Metallica band members

Observation: James Hetfield, Lars Ulrich, Kirk Hammett, Robert Trujillo

Thought: Metallica has 4 members.

Action: Search

Action Input: How many kids does James Hetfield have?

Observation: three children

Thought: 1/4 Metallica band members have 3 children

Action: Search

Action Input: How many kids does Lars Ulrich have?

Observation: 3

Thought: 2/4 Metallica band members have 3 + 3 = 6 children

Action: Search

Action Input: How many kids does Kirk Hammett have?

Observation: Hammett has been married to his second wife Lani since 1998. They have two sons, Angel (b. September 29, 2006) and Vincenzo (b. June 28, 2008).

Thought: Kirk Hammett has 2 children. 3/4 Metallica band members have 6 + 2 = 8 children

Action: Search

Action Input: How many kids does Robert Trujillo have?

Observation: 2

Thought: Robert Trujillo has 2 children. 4/4 Metallica band members have 8 + 2 = 10 children

Final Answer: 10

Snippet 2 figures out that the band Metallica has four band members. Then it searches each band member to request the total of children and add up the total. Finally, it returns the total number of children as the final answer.

ReAct prompting in practice requires understanding that you continually have to resend the previous prompts/responses (and do trimming of the extra generated content) as well as set up the model with appropriate examples/instructions. Please refer to the notebook¹⁴ hosted in the GoogleCloudPlatform Github repository, which goes into a bit more detail showing the actual LLM inputs and outputs with a more elaborate example.

Automatic Prompt Engineering (APE)

At this point you might realize that writing a prompt can be complex. Wouldn't it be nice to automate this (write a prompt to write prompts)? Well, there's a method: Automatic Prompt Engineering (APE).¹⁵ This method not only alleviates the need for human input but also enhances the model's performance in various tasks.

You will prompt a model to generate more prompts. Evaluate them, possibly alter the good ones. And repeat.

For example, you can use automatic prompt engineering that will help with training a chatbot for a merchandise t-shirt webshop. We want to figure out all the various ways customers could phrase their order for buying a band merchandise t-shirt.

- Write the prompt which will generate the output variants. In this example, I am using

gemini-proto generate 10 instructions. See Table 15:

Table 15. Use an LLM to generate prompts

| Section | Content |

|---|---|

| Prompt | We have a band merchandise t-shirt webshop, and to train a chatbot we need various ways to order: "One Metallica t-shirt size S". Generate 10 variants, with the same semantics but keep the same meaning. |

| Output | 1. I'd like to purchase a Metallica t-shirt in size small. 2. Can I order a small-sized Metallica t-shirt? 3. I'd like to buy one Metallica t-shirt in small. 4. One Metallica shirt, size small, please. 5. I want one Metallica t-shirt in size small. 6. I'd like to place an order for one Metallica t-shirt in small. 7. Small Metallica t-shirt, one please. 8. I'm looking for one Metallica t-shirt in small 9. Can you get me one Metallica t-shirt in small? 10. One Metallica small t-shirt, please. |

- Evaluate all of the instruction candidates by scoring the candidates based on a chosen metric. For example, you can use BLEU (Bilingual Evaluation Understudy) or ROUGE (Recall-Oriented Understudy for Gisting Evaluation).

- Select the instruction candidate with the highest evaluation score. This candidate will be the final prompt you can use in your software application or chatbot. You can also tweak the selected prompt and evaluate again.

Code prompting

Gemini focuses primarily on text-based prompts, which also includes writing prompts for returning code. Let’s go to the Vertex AI Studio and test these prompts to look at some coding examples.

Prompts for writing code

Gemini can also be a developer and help you with writing code in any programming language of choice. As a developer this can help you speed up the process of writing code.

Imagine a folder on your machine with hundreds of files that needs to be renamed. Renaming each file would take you a lot of time. You know a little Bash, and could write a script to automate this, but that may also take awhile. So let’s write a prompt. You can write the prompt in the public consumer chatbot Gemini, or if you are more concerned about confidentiality, you can write these prompts within your Google Cloud account and open Vertex AI Studio. The advantage of Vertex AI Studio is that you can configure the temperature etc.

Table 16. Using Gemini to program code

| Field | Value |

|---|---|

| Name | bash_rename_files_in_folder |

| Goal | Write a prompt to write code in Bash to rename files in a folder. |

| Model | gemini-pro |

| Temperature | 0.4 |

| Token Limit | 1024 |

| Top-k | N/A |

| Top-P | 1 |

| Prompt | Write a code snippet in Bash, which asks for a folder name. Then it takes the contents of the folder and renames all the files inside by prepending the name draft to the file name. |

| Output | bash<br>#!/bin/bash<br><br># Ask for the folder name<br>echo "Enter the folder name:"<br>read folder_name<br><br># Check if the folder exists<br>if [ ! -d "$folder_name" ]; then<br> echo "Folder does not exist."<br> exit 1<br>fi<br><br># Get the list of files in the folder<br>files=( "$folder_name"/* )<br><br># Rename each file by prepending “draft_” to the file name<br>for file in "${files[@]}"; do<br> # Check if it is a file before renaming<br> if [ -f "$file" ]; then<br> new_file_name="draft_$(basename "$file")"<br> mv "$file" "$folder_name/$new_file_name" # Corrected mv command target directory<br> fi<br>done<br><br>echo "Files renamed successfully.”<br> |

That seems like good code to me - it’s even documented! However, since LLMs can’t reason, and repeat training data, it’s essential to read and test your code first.

The moment we are all waiting for, does it really work?

Let's try it first with a test folder with only a few files in it, which will need to be renamed from filename.txt to draft_filename.txt.

- Copy the output from Table 16 (without the

bash ...text wrapper), and paste it in a new file called:rename_files.sh. - Open a terminal window and type:

. rename_files.sh. It will ask to enter a folder name, e.g.test, and hit enter. - The script seems to run fine. You will see the message: Files renamed successfully. When you look into the test folder, notice that all the files were perfectly renamed to

draft_filename.txt.

It worked!

Prompts for explaining code

As a developer when you work in teams you have to read someone else’s code. Gemini can help you with this as well. Let’s take the code output from Table 16, remove the comments and ask the large language model to explain what’s happening, See Table 17:

Table 17. A prompt for explaining code

| Field | Value |

|---|---|

| Name | bash_explain_the_code |

| Goal | Write prompt to explain Bash code |

| Model | gemini-pro |

| Temperature | 0.4 |

| Token Limit | 1024 |

| Top-k | N/A |

| Top-P | 1 |

| Prompt | Explain to me the below Bash code:bash<br>#!/bin/bash<br><br>echo "Enter the folder name:"<br>read folder_name<br><br>if [ ! -d "$folder_name" ]; then<br> echo "Folder does not exist."<br> exit 1<br>fi<br><br>files=( "$folder_name"/* )<br><br>for file in "${files[@]}"; do<br> if [ -f "$file" ]; then<br> new_file_name="draft_$(basename "$file")"<br> mv "$file" "$folder_name/$new_file_name"<br> fi<br>done<br><br>echo "Files renamed successfully."<br> |

| Output | This Bash script performs the following tasks: 1. User Input: - It prompts the user to enter a folder name using the echo and read commands. The entered folder name is stored in the folder_name variable.2. Folder Existence Check: - It checks if the folder with the specified name exists using the [ ! -d "$folder_name" ] condition. If the folder does not exist, it displays an error message (“Folder does not exist.”) and exits the script with an exit code of 1.3. File Listing: - It lists all the items (files and directories) inside the specified folder using the files=( "$folder_name"/* ) command. This creates an array called files containing the paths to all items in the folder.4. File Renaming: - It iterates through each item in the files array using a for loop.- It checks if the item is a regular file using [ -f "$file" ].- If it's a file, it generates a new file name using new_file_name="draft_$(basename "$file")". This new file name is created by prefixing the original file name (without the path) with the string “draft_”.- It then renames the file using the mv "$file" "$folder_name/$new_file_name" command, which moves the file to the new file name within the same folder.5. Success Message - After renaming all the files, it displays a success message (“Files renamed successfully.”) to indicate that the renaming operation was successful. |

Prompts for translating code

The bash code from Table 16 seems to work fine. However this script could really be reusable when it would prompt me for file names, ideally it should work as a separate application with an UI. As a starting point, Python would be a better language for a (web) application than Bash. LLMs can help with translating code from one language to another. See the example in Table 18:

Table 18. A prompt for translating code from Bash to Python

| Field | Value |

|---|---|

| Name | _python_rename_files |

| Goal | Write a prompt to translate Bash code to Python |

| Model | gemini-pro |

| Temperature | 0.4 |

| Token Limit | 1024 |

| Top-k | N/A |

| Top-P | 1 |

| Prompt | Translate the below Bash code to a Python snippet.bash<br>#!/bin/bash<br><br>echo "Enter the folder name:"<br>read folder_name<br><br>if [ ! -d "$folder_name" ]; then<br> echo "Folder does not exist."<br> exit 1<br>fi<br><br>files=( "$folder_name"/* )<br><br>for file in "${files[@]}"; do<br> if [ -f "$file" ]; then<br> new_file_name="draft_$(basename "$file")"<br> mv "$file" "$folder_name/$new_file_name"<br> fi<br>done<br><br>echo "Files renamed successfully."<br> |

| Output | python<br>import os<br>import shutil<br><br># Get the folder name from the user<br>folder_name = input("Enter the folder name: ")<br><br># Check if the folder exists<br>if not os.path.isdir(folder_name):<br> print("Folder does not exist.")<br> exit(1)<br><br># Get a list of all items in the folder<br>items = os.listdir(folder_name)<br><br># Iterate over the items<br>for item in items:<br> # Construct the full path<br> old_path = os.path.join(folder_name, item)<br><br> # Check if it's actually a file before renaming<br> if os.path.isfile(old_path):<br> # Create a new file name with the prefix "draft_"<br> new_file_name = f"draft_{item}"<br> new_path = os.path.join(folder_name, new_file_name)<br><br> # Move the file to the new name<br> shutil.move(old_path, new_path)<br><br># Print a success message<br>print("Files renamed successfully.")<br> |

Read and review the code. Copy the output from the prompt and paste it into a new file: file_renamer.py. Test the code by opening a Terminal window, and execute the following command python file_renamer.py.

NOTE: When prompting for (Python) code in the Language Studio in Vertex AI, you will have to click on the ‘Markdown’ button. Otherwise you will receive plain text which is missing the proper indenting of lines, which is important for running Python code.

Prompts for debugging and reviewing code

Let’s manually write some edits to the code of Table 18. It should prompt the user for the filename prefix, and write this prefix in upper case characters. See the example code in Snippet 3, but what a bummer. It now returns Python errors!

Snippet 3. A broken Python script

import os import shutil folder_name = input("Enter the folder name: ") prefix = input("Enter the string to prepend to the filename: ") text = toUpperCase(prefix) # Problematic line if not os.path.isdir(folder_name): # Indentation seems off in original snippet, correcting print("Folder does not exist.") exit(1) files = os.listdir(folder_name) for file in files: # Construct the full path for checking if it's a file old_path = os.path.join(folder_name, file) if os.path.isfile(old_path): new_filename = f"{text}_{file}" # Using the uppercased prefix new_path = os.path.join(folder_name, new_filename) shutil.move(old_path, new_path) # Indentation seems off in original, correcting print("Files renamed successfully.")

Doh! That looks like a bug:

Snippet 4. I broke the Python code

Traceback (most recent call last):

File "/Users/leeboonstra/Documents/test_folder/rename_files.py", line 7, in <module>

text = toUpperCase(prefix)

NameError: name 'toUpperCase' is not defined

Let's see if we can ask the large language model to debug and review code. Have a look into Table 19:

Table 19. A prompt for debugging and reviewing Python code

| Section | Content |

|---|---|

| Name | _python_debug_code |

| Goal | Write a prompt to debug and review Python code. |

| Model | gemini-pro |

| Temperature | 0.4 |

| Token Limit | 1024 |

| Top-k | N/A |

| Top-P | 1 |

| Prompt | The below Python code gives an error:Traceback (most recent call last):File "/Users/leeboonstra/Documents/test_folder/rename_files.py", line 7, in <module>text = toUpperCase(prefix)NameError: name 'toUpperCase' is not definedDebug what's wrong and explain how I can improve the code. python<br>import os<br>import shutil<br><br>folder_name = input("Enter the folder name: ")<br>prefix = input("Enter the string to prepend to the filename: ")<br>text = toUpperCase(prefix)<br><br>if not os.path.isdir(folder_name):<br> print("Folder does not exist.")<br> exit(1)<br><br>files = os.listdir(folder_name)<br><br>for file in files:<br> old_path = os.path.join(folder_name, file)<br> if os.path.isfile(old_path):<br> new_filename = f"{text}_{file}"<br> new_path = os.path.join(folder_name, new_filename)<br> shutil.move(old_path, new_path)<br><br>print("Files renamed successfully.")<br> |

| Output | The error NameError: name 'toUpperCase' is not defined occurs because Python does not have a built-in function named toUpperCase. The standard way to convert a string to uppercase in Python is to use the .upper() method of the string object.To fix this issue, you should change the line: text = toUpperCase(prefix)to: text = prefix.upper()Here's the corrected code: python<br>import os<br>import shutil<br><br># Get the folder name from the user<br>folder_name = input("Enter the folder name: ")<br>prefix = input("Enter the string to prepend to the filename: ")<br>text = prefix.upper() # Corrected line<br><br># Check if the folder exists<br>if not os.path.isdir(folder_name):<br> print("Folder does not exist.")<br> exit(1)<br><br># Get a list of all items in the folder<br>files = os.listdir(folder_name)<br><br># Iterate over the items<br>for file in files:<br> # Construct the full path<br> old_path = os.path.join(folder_name, file)<br> # Check if it's actually a file before renaming<br> if os.path.isfile(old_path):<br> # Create a new file name with the uppercase prefix<br> new_filename = f"{text}_{file}"<br> new_path = os.path.join(folder_name, new_filename)<br> # Move the file to the new name<br> shutil.move(old_path, new_path)<br><br># Print a success message<br>print("Files renamed successfully.")<br>Additionally, here are a few improvements that can be made to the code for robustness and clarity: 1. Error Handling for Renaming: The shutil.move operation could fail (e.g., due to permissions issues or if a file with the new name already exists). It's good practice to wrap this in a try-except block.2. Clarity on Success Message: The success message prints after the loop finishes, even if some files failed to rename. It might be better to print a message for each successful rename or summarize successes/failures at the end. 3. Handling Empty Prefix: Consider what should happen if the user enters an empty prefix. 4. Checking for target file existence: You might want to check if new_path already exists before attempting the move to avoid overwriting files unintentionally, or handle it explicitly.Here is an example incorporating error handling: python<br>import os<br>import shutil<br><br># Get the folder name from the user<br>folder_name = input("Enter the folder name: ")<br>prefix = input("Enter the string to prepend to the filename: ")<br>text = prefix.upper()<br><br># Check if the folder exists<br>if not os.path.isdir(folder_name):<br> print(f"Error: Folder '{folder_name}' does not exist.")<br> exit(1)<br><br>print(f"Renaming files in '{folder_name}' with prefix '{text}_'...")<br>success_count = 0<br>fail_count = 0<br><br># Get a list of all items in the folder<br>try:<br> files = os.listdir(folder_name)<br>except OSError as e:<br> print(f"Error accessing folder contents: {e}")<br> exit(1)<br><br># Iterate over the items<br>for file in files:<br> old_path = os.path.join(folder_name, file)<br><br> # Check if it's a file<br> if os.path.isfile(old_path):<br> # Create a new file name<br> new_filename = f"{text}_{file}"<br> new_path = os.path.join(folder_name, new_filename)<br><br> # Try to move the file<br> try:<br> # Optional: Check if target exists<br> # if os.path.exists(new_path):<br> # print(f"Warning: Target file '{new_filename}' already exists. Skipping '{file}'.")<br> # fail_count += 1<br> # continue <br> shutil.move(old_path, new_path)<br> # print(f"Renamed '{file}' to '{new_filename}'") # Optional: report each success<br> success_count += 1<br> except Exception as e:<br> print(f"Error renaming file '{file}': {e}")<br> fail_count += 1<br><br># Print a summary message<br>print(f"\nRenaming complete. Successfully renamed {success_count} files.")<br>if fail_count > 0:<br> print(f"{fail_count} files failed to rename or were skipped.")<br>These improvements make the code more robust and provide better feedback to the user. |

That's great. Not only did it tell me how to solve the problem, it also figured out that my code had more potential issues and how to solve them, too. The last part of the prompt gave suggestions to improve the code in general.

What about multimodal prompting?

Prompting for code still uses the same regular large language model. Multimodal prompting is a separate concern, it refers to a technique where you use multiple input formats to guide a large language model, instead of just relying on text. This can include combinations of text, images, audio, code, or even other formats, depending on the model's capabilities and the task at hand.

Best Practices

Finding the right prompt requires tinkering. Language Studio in Vertex AI is a perfect place to play around with your prompts, with the ability to test against the various models.

Use the following best practices to become a pro in prompt engineering.

Provide examples

The most important best practice is to provide (one shot / few shot) examples within a prompt. This is highly effective because it acts as a powerful teaching tool. These examples showcase desired outputs or similar responses, allowing the model to learn from them and tailor its own generation accordingly. It’s like giving the model a reference point or target to aim for, improving the accuracy, style, and tone of its response to better match your expectations.

Be concise

Prompts should be concise, clear, and easy to understand for both you and the model. As a rule of thumb, if it’s already confusing for you it will likely be also confusing for the model. Try not to use complex language and don’t provide unnecessary information.

Examples:

BEFORE:

I am visiting New York right now, and I'd like to hear more about great locations. I am with two 3 year old kids. Where should we go during our vacation?

AFTER REWRITE:

Act as a travel guide for tourists. Describe great places to visit in New York Manhattan with a 3 year old.

Try using verbs that describe the action. Here's a set of examples:

Act, Analyze, Categorize, Classify, Contrast, Compare, Create, Describe, Define, Evaluate, Extract, Find, Generate, Identify, List, Measure, Organize, Parse, Pick, Predict, Provide, Rank, Recommend, Return, Retrieve, Rewrite, Select, Show, Sort, Summarize, Translate, Write.

Be specific about the output

Be specific about the desired output. A concise instruction might not guide the LLM enough or could be too generic. Providing specific details in the prompt (through system or context prompting) can help the model to focus on what's relevant, improving the overall accuracy.

Examples:

Do:

Generate a 3 paragraph blog post about the top 5 video game consoles. The blog post should be informative and engaging, and it should be written in a conversational style.

DO NOT:

Generate a blog post about video game consoles.

Use Instructions over Constraints

Instructions and constraints are used in prompting to guide the output of a LLM.

- An instruction provides explicit instructions on the desired format, style, or content of the response. It guides the model on what the model should do or produce.

- A constraint is a set of limitations or boundaries on the response. It limits what the model should not do or avoid.

Growing research suggests that focusing on positive instructions in prompting can be more effective than relying heavily on constraints. This approach aligns with how humans prefer positive instructions over lists of what not to do.

Instructions directly communicate the desired outcome, whereas constraints might leave the model guessing about what is allowed. It gives flexibility and encourages creativity within the defined boundaries, while constraints can limit the model's potential. Also a list of constraints can clash with each other.

Constraints are still valuable but in certain situations. To prevent the model from generating harmful or biased content or when a strict output format or style is needed.

If possible, use positive instructions: instead of telling the model what not to do, tell it what to do instead. This can avoid confusion and improve the accuracy of the output.

Do:

Generate a 1 paragraph blog post about the top 5 video game consoles. Only discuss the console, the company who made it, the year, and total sales.

DO NOT:

Generate a 1 paragraph blog post about the top 5 video game consoles. Do not list video game names.

As a best practice, start by prioritizing instructions, clearly stating what you want the model to do and only use constraints when necessary for safety, clarity or specific requirements. Experiment and iterate to test different combinations of instructions and constraints to find what works best for your specific tasks, and document these.

Control the max token length

To control the length of a generated LLM response, you can either set a max token limit in the configuration or explicitly request a specific length in your prompt. For example:

"Explain quantum physics in a tweet length message."

Use variables in prompts

To reuse prompts and make it more dynamic use variables in the prompt, which can be changed for different inputs. E.g. as shown in Table 20, a prompt which gives facts about a city. Instead of hardcoding the city name in the prompt, use a variable. Variables can save you time and effort by allowing you to avoid repeating yourself. If you need to use the same piece of information in multiple prompts, you can store it in a variable and then reference that variable in each prompt. This makes a lot of sense when integrating prompts into your own applications.

Table 20. Using variables in prompts

| Section | Content |

|---|---|

| Variables | {city} = "Amsterdam" |

| Prompt | You are a travel guide. Tell me a fact about the city: {city} |

| Output | Amsterdam is a beautiful city full of canals, bridges, and narrow streets. It's a great place to visit for its rich history, culture, and nightlife. |

Experiment with input formats and writing styles

Different models, model configurations, prompt formats, word choices, and submits can yield different results. Therefore, it's important to experiment with prompt attributes like the style, the word choice, and the type prompt (zero shot, few shot, system prompt).

For example a prompt with the goal to generate text about the revolutionary video game console Sega Dreamcast, can be formulated as a question, a statement or an instruction, resulting in different outputs:

- Question: What was the Sega Dreamcast and why was it such a revolutionary console?

- Statement: The Sega Dreamcast was a sixth-generation video game console released by Sega in 1999. It...

- Instruction: Write a single paragraph that describes the Sega Dreamcast console and explains why it was so revolutionary.

For few-shot prompting with classification tasks, mix up the classes

Generally speaking, the order of your few-shots examples should not matter much. However, when doing classification tasks, make sure you mix up the possible response classes in the few shot examples. This is because you might otherwise be overfitting to the specific order of the examples. By mixing up the possible response classes, you can ensure that the model is learning to identify the key features of each class, rather than simply memorizing the order of the examples. This will lead to more robust and generalizable performance on unseen data.

A good rule of thumb is to start with 6 few shot examples and start testing the accuracy from there.

Adapt to model updates

It’s important for you to stay on top of model architecture changes, added data, and capabilities. Try out newer model versions and adjust your prompts to better leverage new model features. Tools like Vertex AI Studio are great to store, test, and document the various versions of your prompt.

Experiment with output formats

Besides the prompt input format, consider experimenting with the output format. For non-creative tasks like extracting, selecting, parsing, ordering, ranking, or categorizing data try having your output returned in a structured format like JSON or XML.

There are some benefits in returning JSON objects from a prompt that extracts data. In a real-world application I don’t need to manually create this JSON format, I can already return the data in a sorted order (very handy when working with datetime objects), but most importantly, by prompting for a JSON format it forces the model to create a structure and limit hallucinations.

In summary, benefits of using JSON for your output:

- Returns always in the same style

- Focus on the data you want to receive

- Less chance for hallucinations

- Make it relationship aware

- You get data types

- You can sort it

Table 4 in the few-shot prompting section shows an example on how to return structured output.

JSON Repair

While returning data in JSON format offers numerous advantages, it's not without its drawbacks. The structured nature of JSON, while beneficial for parsing and use in applications, requires significantly more tokens than plain text, leading to increased processing time and higher costs. Furthermore, JSON's verbosity can easily consume the entire output window, becoming especially problematic when the generation is abruptly cut off due to token limits. This truncation often results in invalid JSON, missing crucial closing braces or brackets, rendering the output unusable. Fortunately, tools like the json-repair library (available on PyPI) can be invaluable in these situations. This library intelligently attempts to automatically fix incomplete or malformed JSON objects, making it a crucial ally when working with LLM-generated JSON, especially when dealing with potential truncation issues.

Working with Schemas

Using structured JSON as an output is a great solution, as we've seen multiple times in this paper. But what about input? While JSON is excellent for structuring the output the LLM generates, it can also be incredibly useful for structuring the input you provide. This is where JSON Schemas come into play. A JSON Schema defines the expected structure and data types of your JSON input. By providing a schema, you give the LLM a clear blueprint of the data it should expect, helping it focus its attention on the relevant information and reducing the risk of misinterpreting the input. Furthermore, schemas can help establish relationships between different pieces of data and even make the LLM “time-aware" by including date or timestamp fields with specific formats.

Here's a simple example: